Keycloak & Open Shift

Hi there!

So. You're running Open Shift Container Platform 4.12+ and you're wanting to deploy that shiny new Red Hat Keycloak Operator (v22) and set up Oauth from Keycloak into Open Shift.

How do you deploy Keycloak as an IDP for Open Shift? The magic words being "Configure Keycloak as an IDP for Open Shift" [hashtag seo].

Well, let us talk about that.

Is it straightforward? Sort of.

Well documented? No.

Let's fix that.

First off, some assumptions:

- Let's assume you are paying for Red Hat and have access to the Operators.

- I'll add some "here's how to do it without operators" options, but I'm 99% sure, if you're running Open Shift, you're in the RH ecosystem.

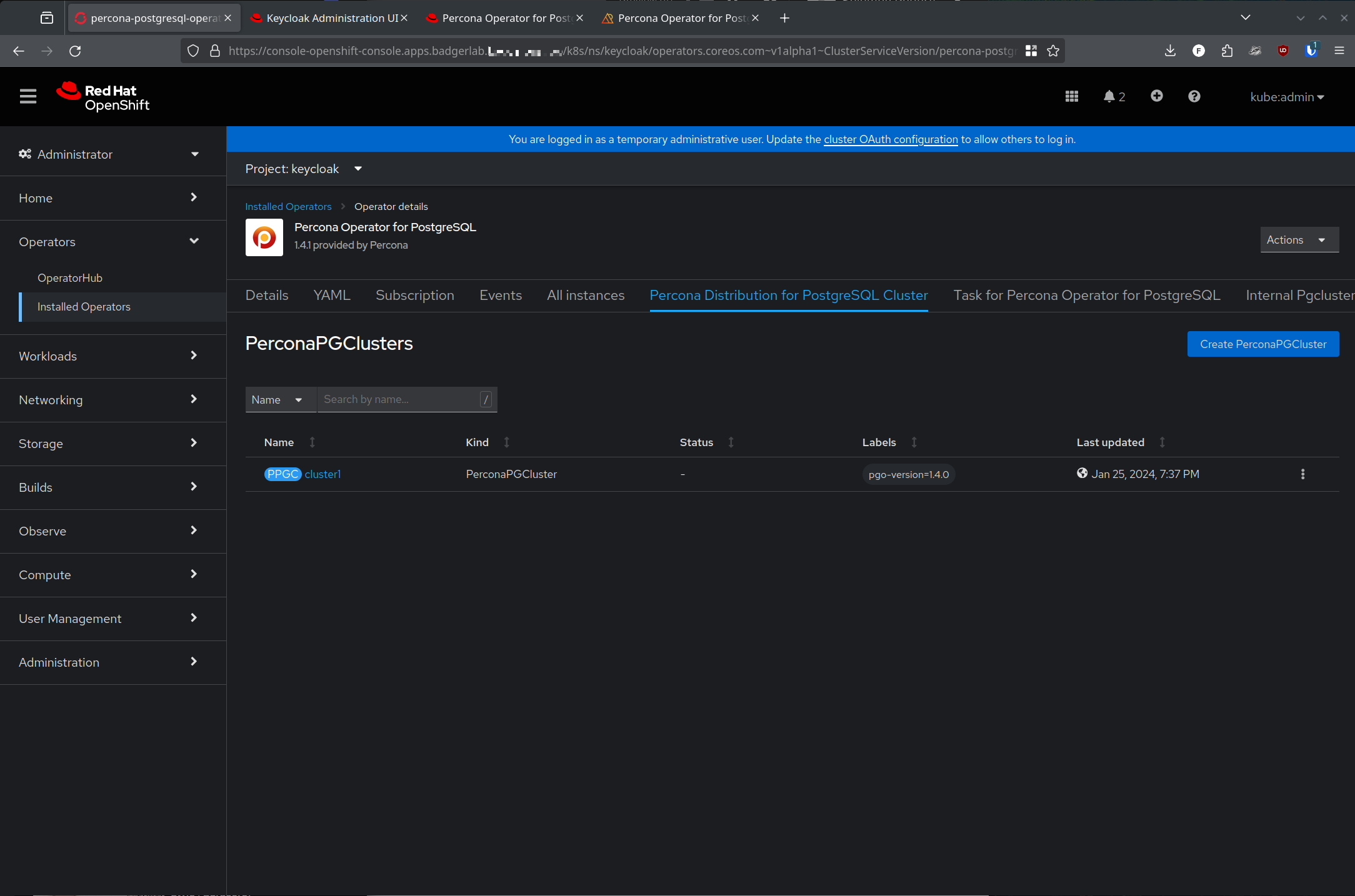

- Let's assume you're deploying a standalone PostgreSQL DB, or cluster - I used the excellent Percona Operator for PostgreSQL.

  - NOTE: The "Red Hat Certified" version of the operator is 2.3.1 as of this writing.

- Finally, I'm going to assume that you know how to click "install" on the operator page, so I'm not going to walk you through that step by step.

- If you don't want all the preamble, skip down to the "Configuring Oauth & Groups" section because that is what you're likely stuck on.

Create yourself a namespace, er, I mean project - I used keycloak since I am a super creative individual. In that namespace, deploy your PostgreSQL DB in whichever manner you choose - I am using the above-mentioned Percona Operator, and took all the defaults when deploying the PerconaPGCluster using the "create PerconaPGCluster" button.

Go grab a cup of coffee, tea or $beverage, it'll take a few minutes for everything to deploy.

If you're not into the operator/PostgreSQL cluster thing, you can just deploy an ephemeral PostgreSQL DB by following the guide on Keycloak.org.

Next, install the Red Hat build of Keycloak v22 operator into the namespace.

After that install is complete, we'll deploy the Keycloak instance.

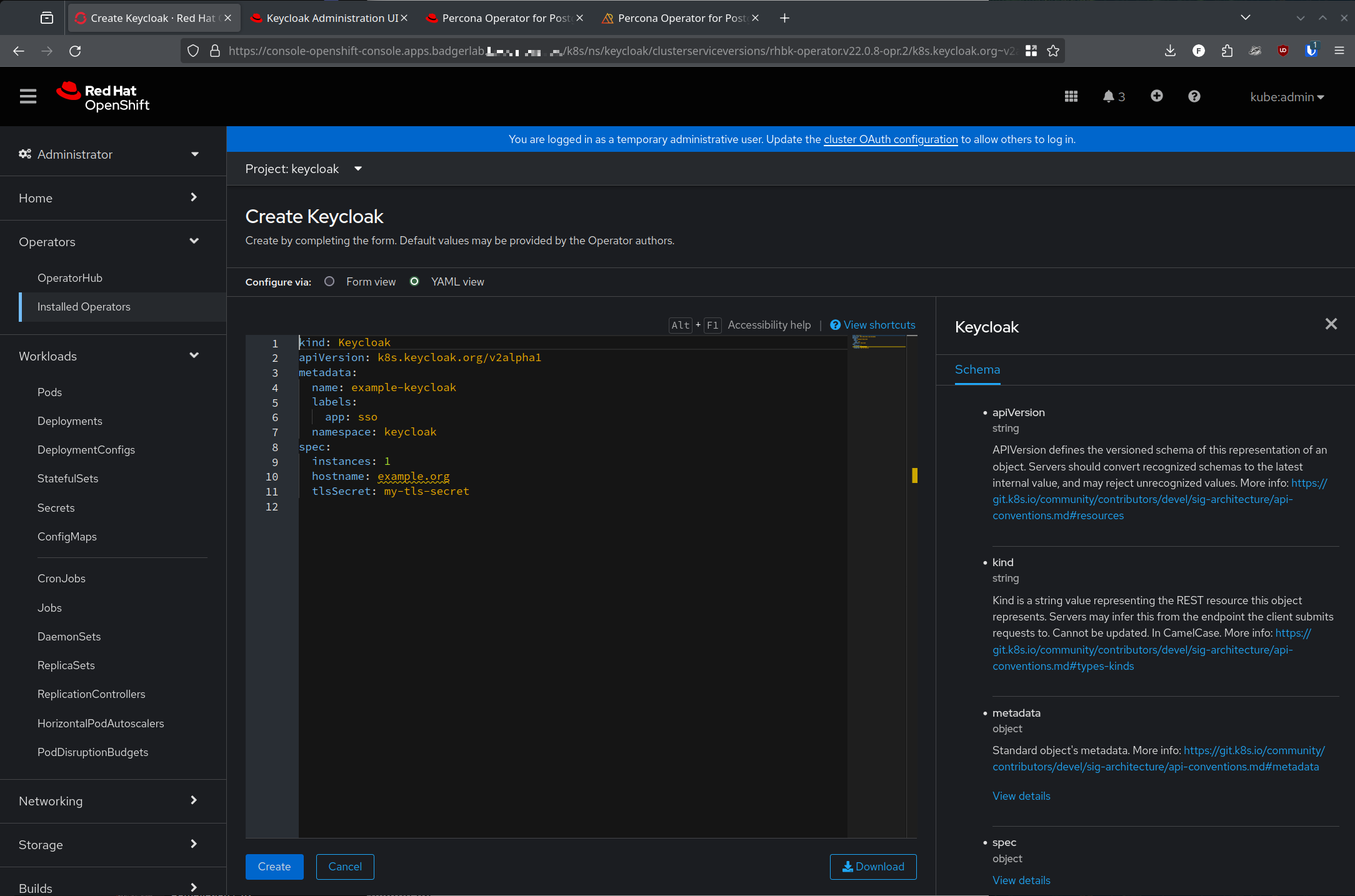

Note: as of this writing, the operator's default yaml is incorrectly structured. I pushed a bugfix to the upstream GitHub repo to fix the structure, but it hasn't made it down to the Red Hat build yet.

Wrong structure:

    kind: Keycloak
    apiVersion: k8s.keycloak.org/v2alpha1
    metadata:
      name: example-keycloak
      labels:
        app: sso
      namespace: keycloak
    spec:
      instances: 1
      hostname: example.org
      tlsSecret: my-tls-secret

Correct structure:

    kind: Keycloak
    apiVersion: k8s.keycloak.org/v2alpha1
    metadata:
      name: example-keycloak
      labels:
        app: sso
      namespace: keycloak
    spec:
      instances: 1
      hostname:
        hostname: example.org
      http:
        tlsSecret: my-tls-secret

Both the hostname and tlsSecret blocks are incorrectly formatted, which will result in a failed Keycloak instance deployment.
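The structures above leave out the database connection. A minimal sketch of a fuller Keycloak resource, written to a file from the shell, might look like the following. The `db` values are assumptions for illustration only: `postgres-db`, `keycloak-db-secret` and its `username`/`password` keys are hypothetical names, so substitute whatever your PostgreSQL deployment actually created.

```shell
# Sketch only: writes a Keycloak CR with a database section to keycloak.yaml.
# The db host and secret names below are hypothetical placeholders.
cat > keycloak.yaml <<'EOF'
kind: Keycloak
apiVersion: k8s.keycloak.org/v2alpha1
metadata:
  name: example-keycloak
  labels:
    app: sso
  namespace: keycloak
spec:
  instances: 1
  db:
    vendor: postgres
    host: postgres-db              # hypothetical service name
    usernameSecret:
      name: keycloak-db-secret     # hypothetical secret
      key: username
    passwordSecret:
      name: keycloak-db-secret     # hypothetical secret
      key: password
  hostname:
    hostname: example.org
  http:
    tlsSecret: my-tls-secret
EOF

# Review the file, then apply it with: oc apply -f keycloak.yaml
```

Writing the manifest to a file first makes it easy to diff against whatever the operator's form view generates before anything touches the cluster.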

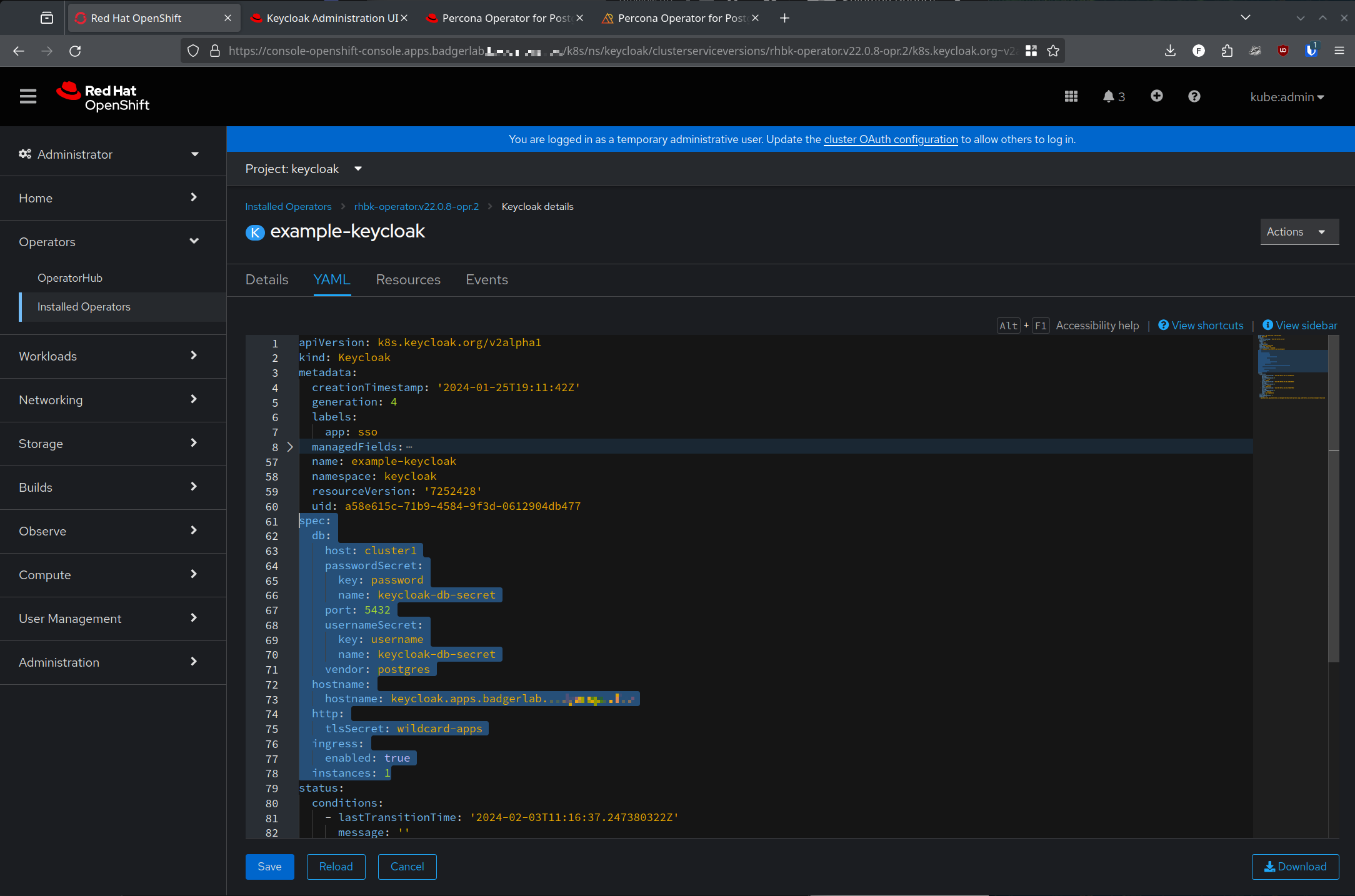

Here is a screenshot of correctly configured yaml for my Keycloak deployment:

Now, this is actually an incorrect deployment! I'm going to dig into that in another blog post since it is a separate issue, but re-using the *.apps.<cluster>.<domain> certificate and ingress causes a weird issue: sometimes traffic for console.apps.<cluster>.<domain> gets sent to the Keycloak service/pod, because http/2 connection reuse confuses the console route with the Keycloak route.

As noted in https://docs.openshift.com/container-platform/4.12/networking/ingress-operator.html#nw-http2-haproxy_configuring-ingress the 'correct' workaround is to use a completely separate certificate:

To enable the use of HTTP/2 for the connection from the client to HAProxy, a route must specify a custom certificate. A route that uses the default certificate cannot use HTTP/2. This restriction is necessary to avoid problems from connection coalescing, where the client re-uses a connection for different routes that use the same certificate.

That said, it is not immediately obvious where http/2 is set to be the default for Open Shift 4.12, or for the Keycloak ingress itself. :shrug: This one took quite a while to track down and figure out, since the symptom was traffic randomly getting sent to the wrong pod (Keycloak).

Ok, moving on.

Now that your Keycloak instance is deployed into your namespace, grab the initial admin username and password:

❯ oc -n keycloak get secret example-keycloak-initial-admin -o jsonpath='{.data.username}' | base64 --decode ; echo

admin

❯ oc -n keycloak get secret example-keycloak-initial-admin -o jsonpath='{.data.password}' | base64 --decode ; echo

<redacted>

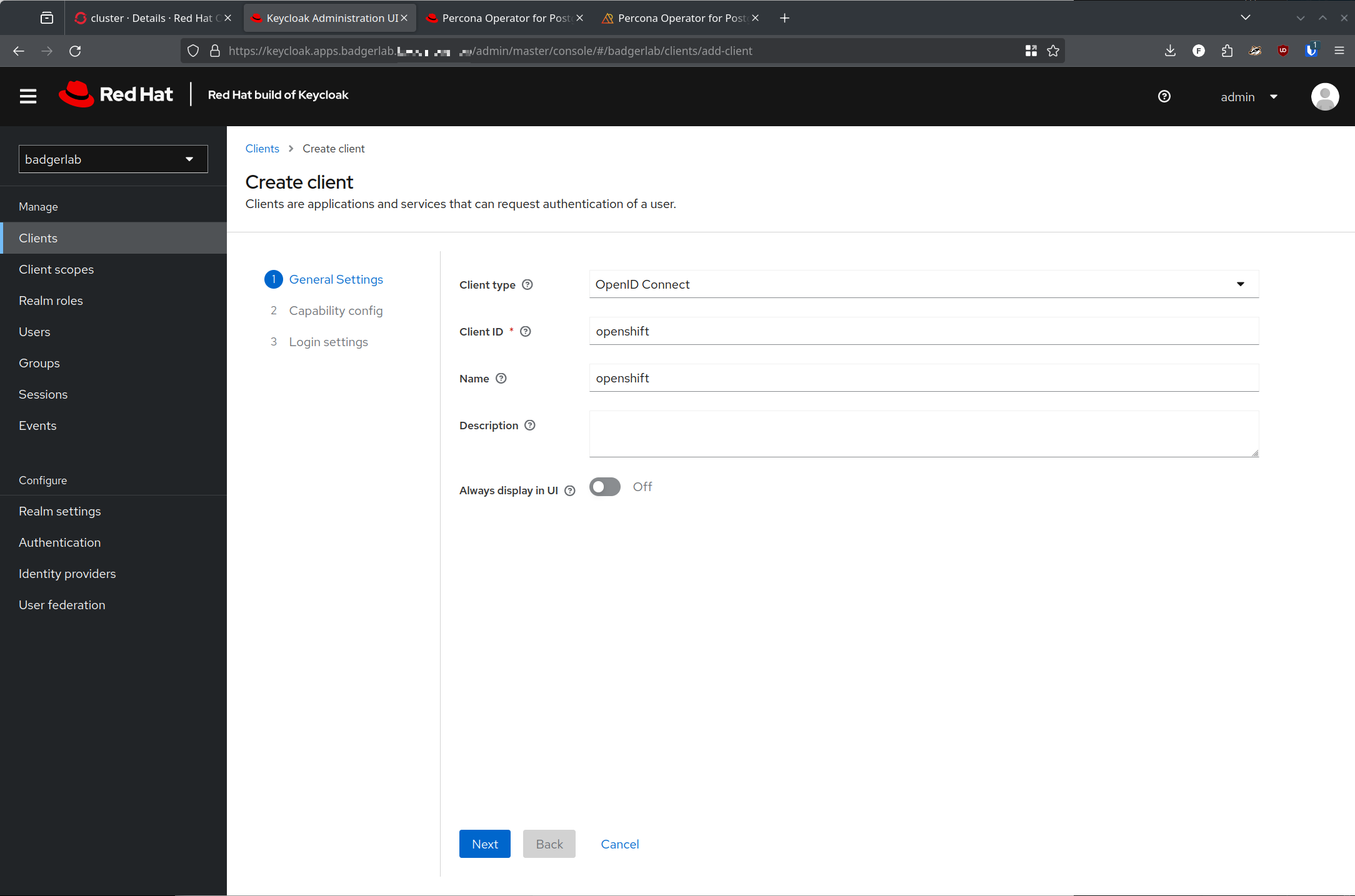

If you're not familiar with Keycloak - it can be quite complicated to get going initially, which is the point of this blog post - then you'll want to refer to the Keycloak Server Admin docs, specifically the Realm creation/configuration section. You don't want to use the master Realm for your internal apps. I created a new realm called badgerlab to hold my Open Shift client.

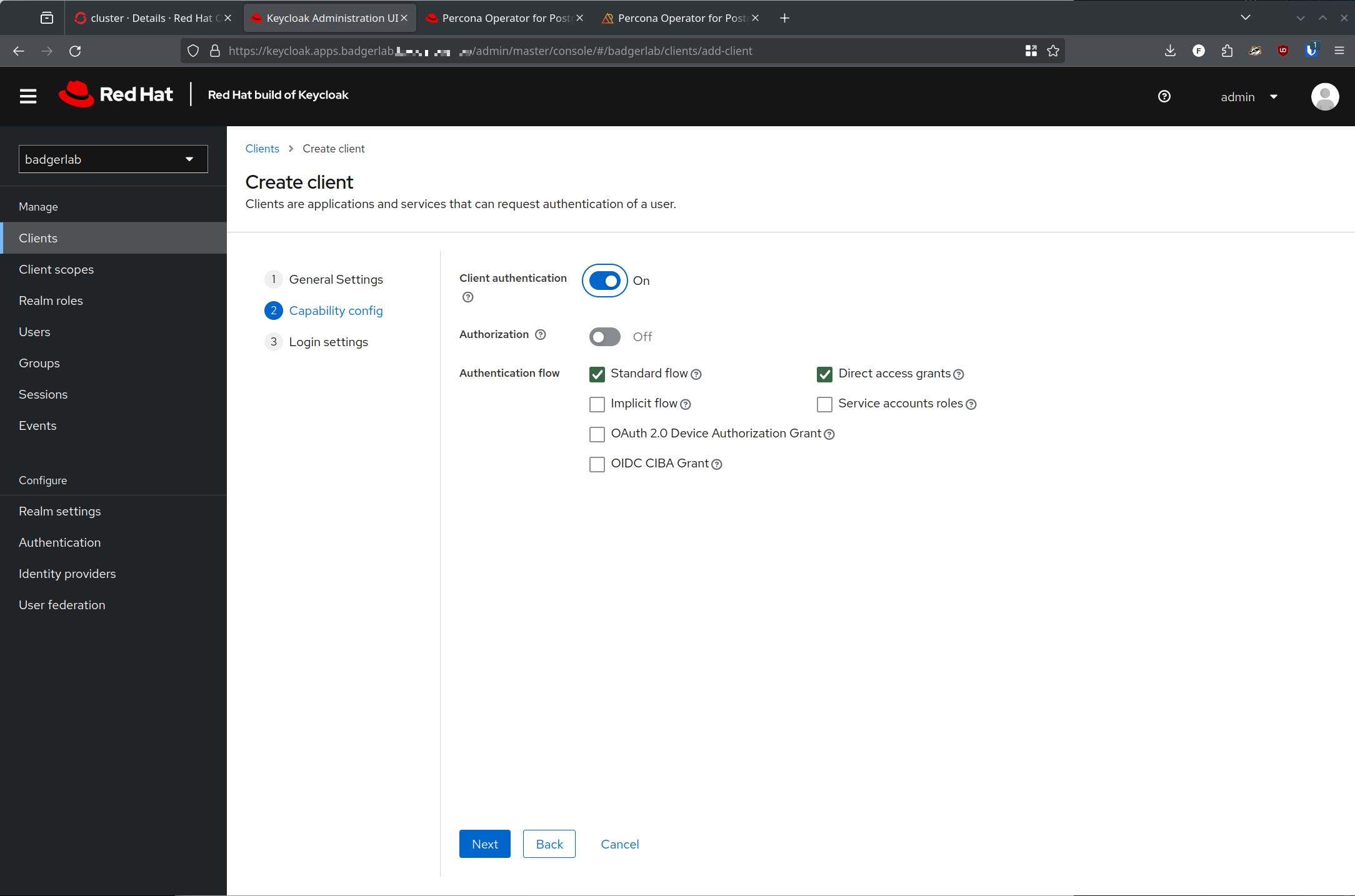

Now, create a new client in that new realm:

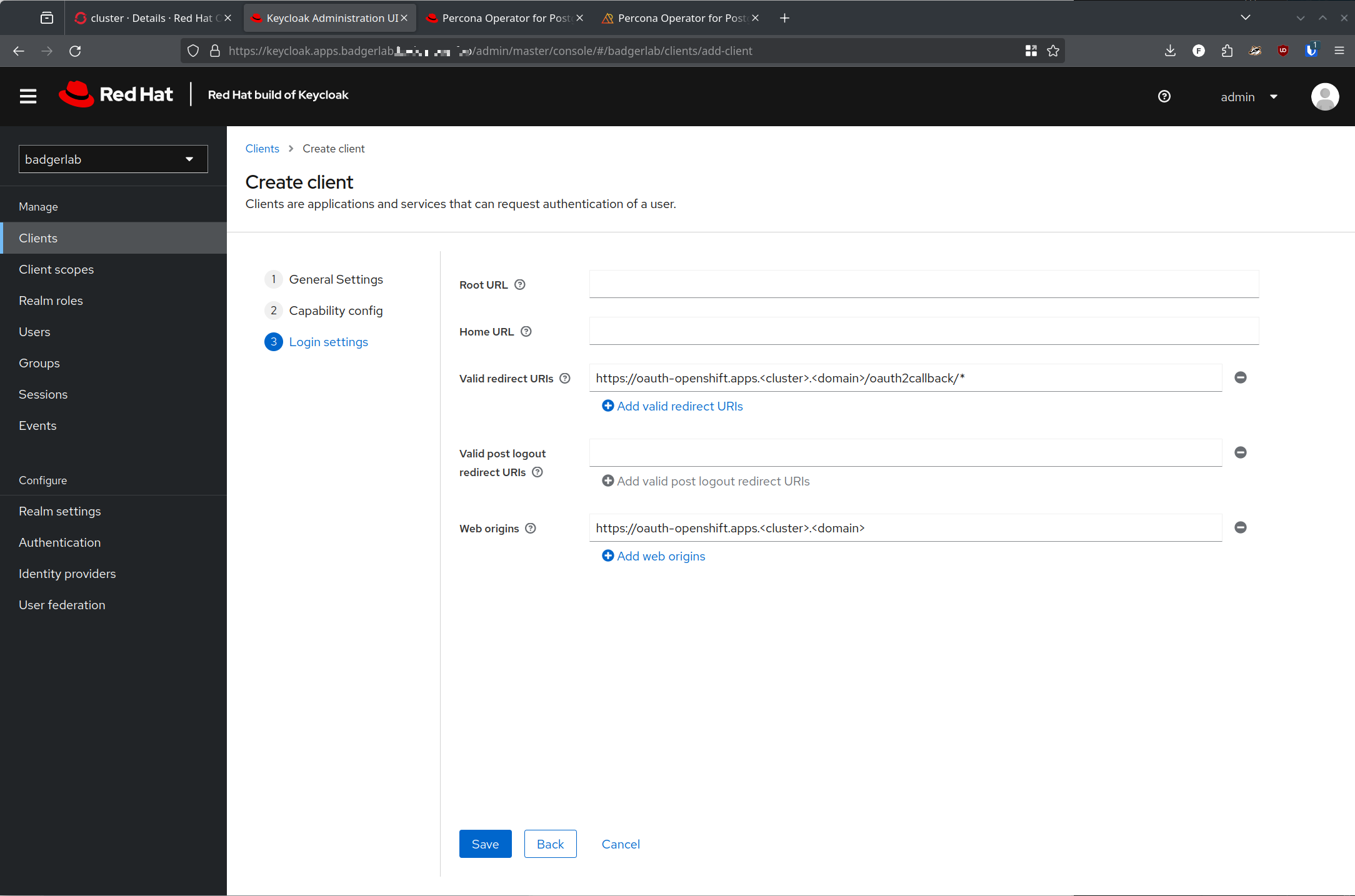

One huge piece of confusion that I had was which redirect URIs would be required for this - again, something that is not well documented and that I am unfortunately not very familiar with.

https://oauth-openshift.apps.<cluster>.<domain>/oauth2callback/*

That's it. That's the one redirect URI you need.

The Web Origin URL should be https://oauth-openshift.apps.<cluster>.<domain>
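If you'd rather compute those two values than type them, here is a small sketch. The `apps.mycluster.example.com` domain is a made-up example; on a real cluster the apps domain can usually be read from the cluster ingress config, as shown in the comment.

```shell
# Example apps domain (an assumption for illustration); on a real cluster try:
#   oc get ingresses.config/cluster -o jsonpath='{.spec.domain}'
apps_domain='apps.mycluster.example.com'

# Values to paste into the Keycloak client settings.
redirect_uri="https://oauth-openshift.${apps_domain}/oauth2callback/*"
web_origin="https://oauth-openshift.${apps_domain}"

echo "Valid redirect URI: ${redirect_uri}"
echo "Web origin:         ${web_origin}"
```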

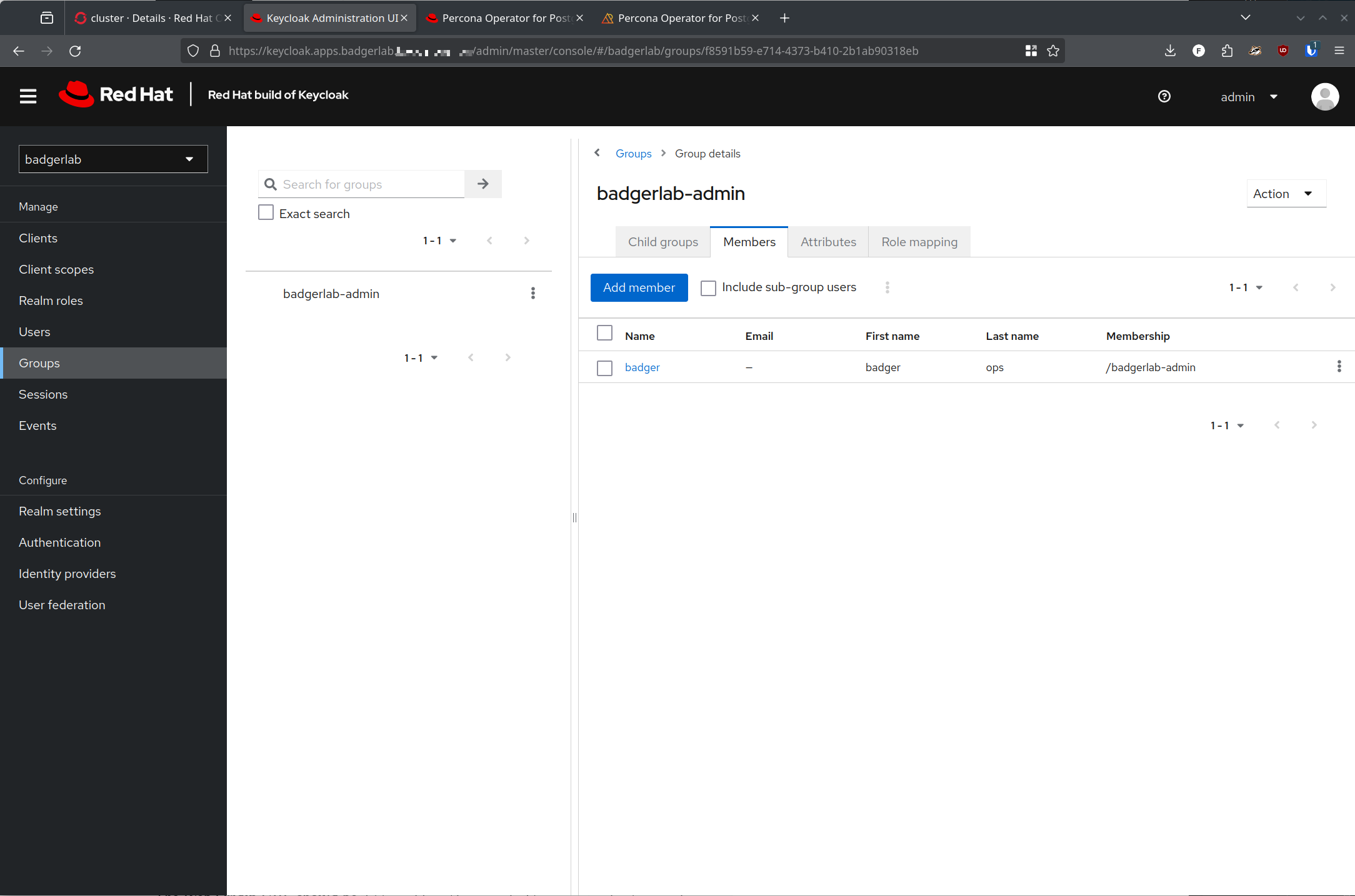

Now that your client is created you can create users and groups! For clarity, I'm creating a single user and a single group.

Cool, we're all set on the Keycloak side now! (Ok, mostly - that is a teeny white lie, but let's let it play out)

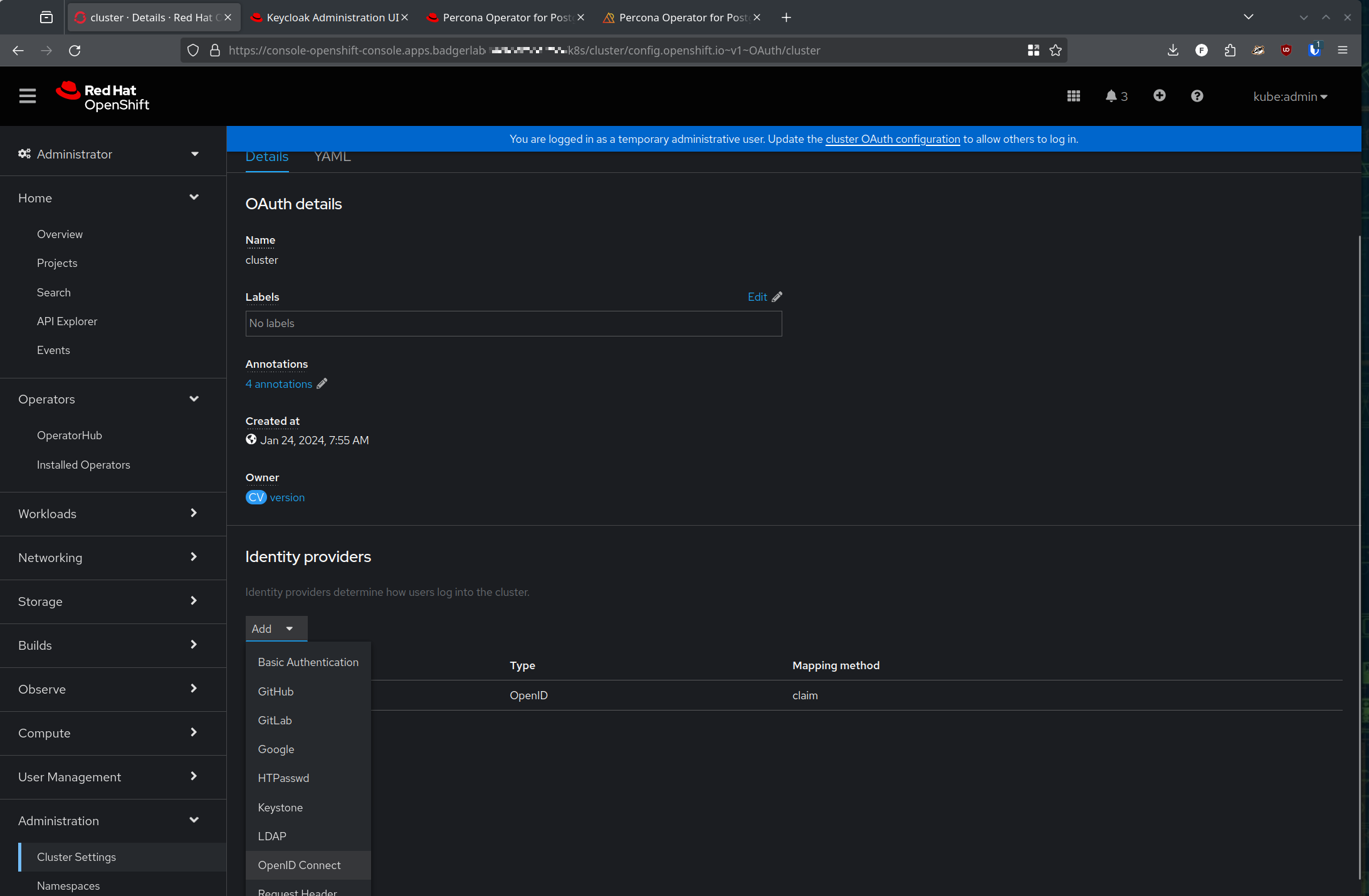

Setup on the Open Shift side is more straightforward: we can follow along with the docs to configure what we created on the Keycloak side.

There are a couple of ways to configure an oauth provider on the Open Shift side, but if you're logged in as kubeadmin you'll have a nice blue banner with a convenient hyperlink to click.

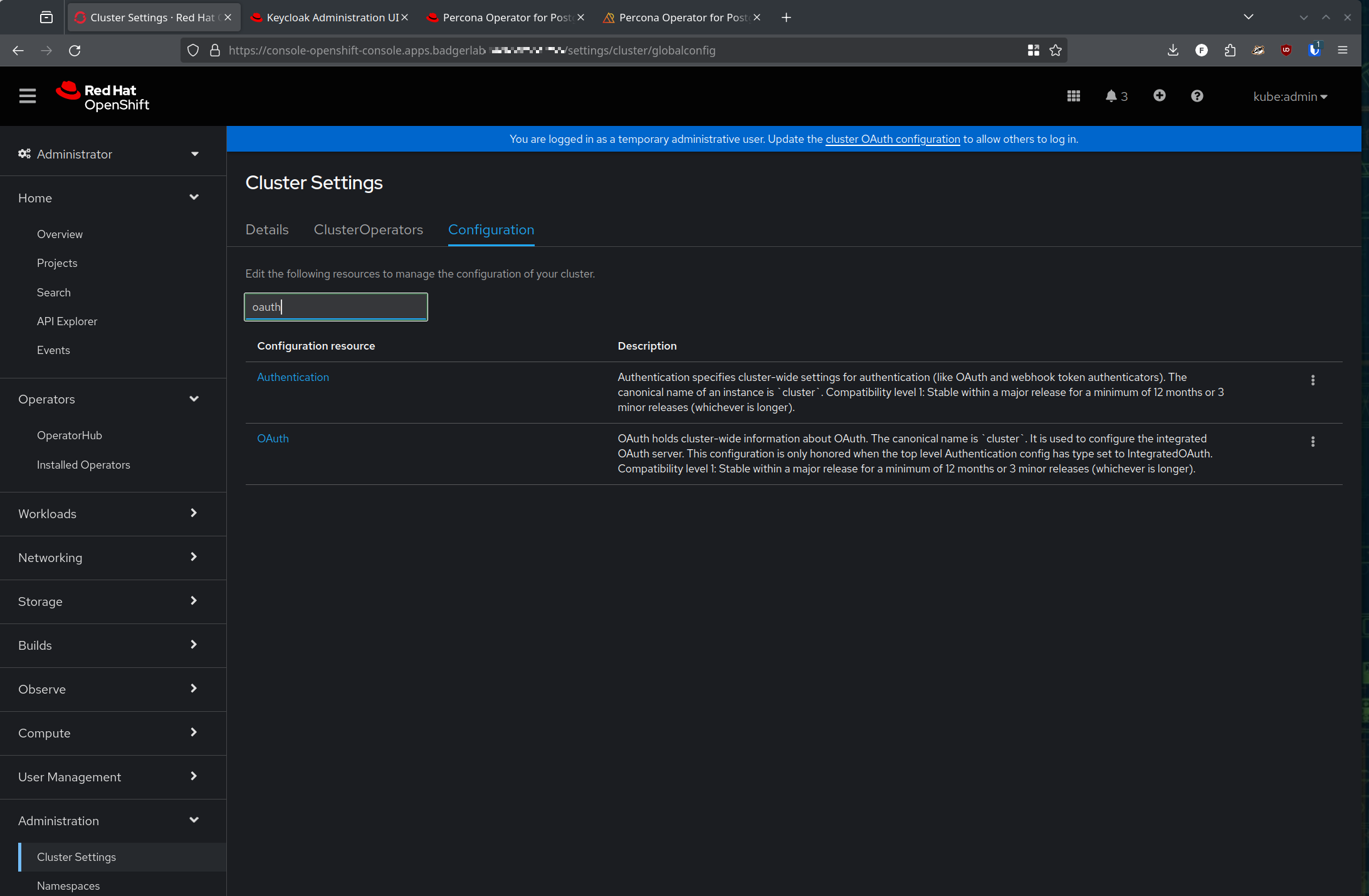

Or, you can go to Administration -> Cluster Settings -> Configuration and search for 'Oauth'.

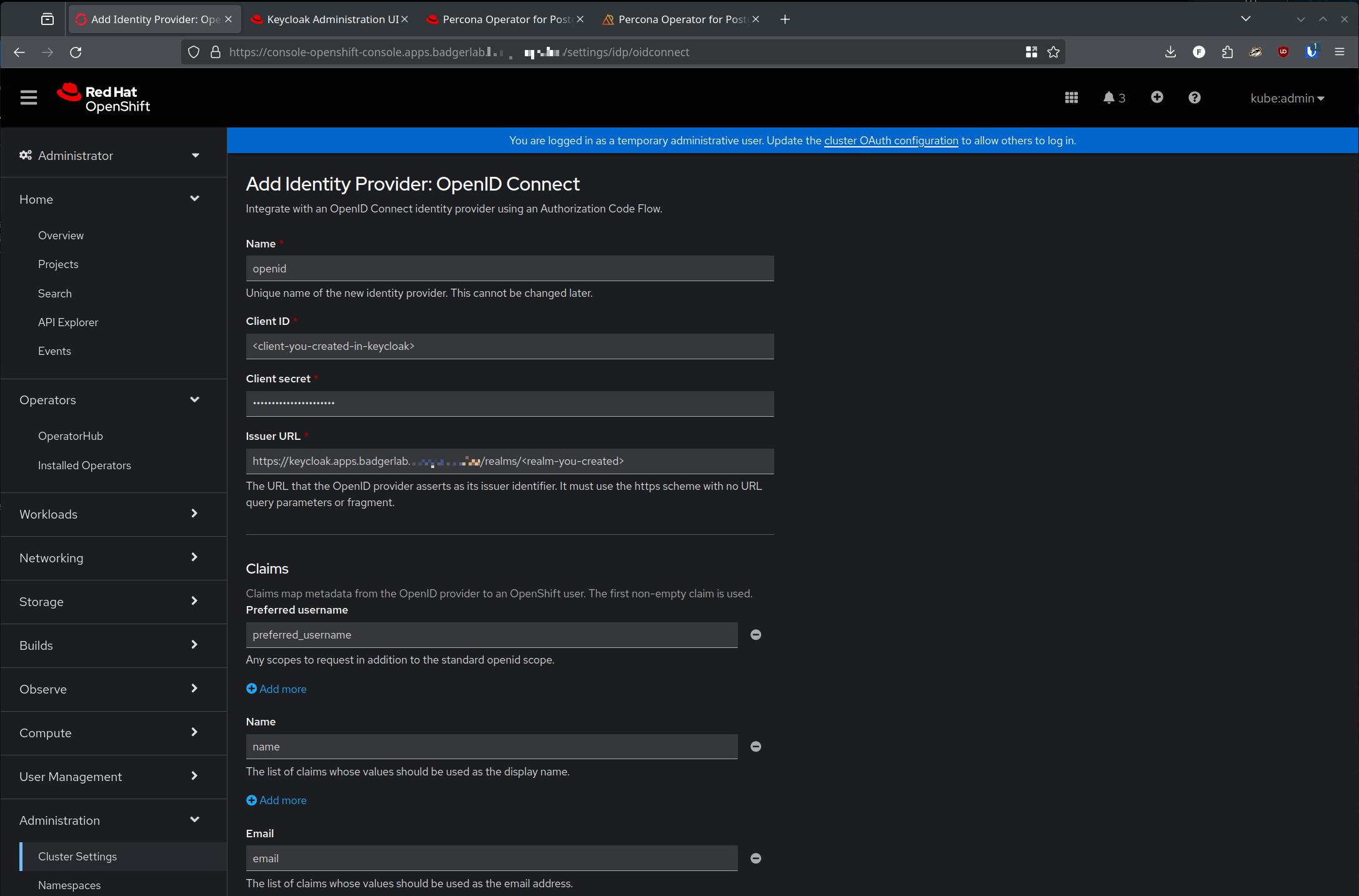

Either way, add a new OpenID Connect Identity Provider (IDP).

Optionally, you can create a secret in the openshift-config namespace, with clientSecret as the key, and your client secret from Keycloak as the value, then use the following yaml structure to manually create an Oauth config:

    spec:
      identityProviders:
        - mappingMethod: claim
          name: openid  # or whatever name you choose!
          openID:
            claims:
              email:
                - email
              groups:
                - groups
              name:
                - name
              preferredUsername:
                - preferred_username
            clientID: openshift
            clientSecret:
              name: <secret you created>
            extraScopes: []
            issuer: 'https://keycloak.apps.<cluster>.<domain>/realms/<your realm>'
          type: OpenID

Note: the issuer is just the URL to your Keycloak realm. You can easily find it by going to realm settings in Keycloak, then clicking the 'OpenID endpoint configuration' hyperlink, which returns the full .well-known/openid-configuration url. Remove the .well-known/openid-configuration suffix and use the rest of the url, with no trailing slash, as the issuer that Open Shift needs.
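To make the issuer rule concrete, here is a sketch that strips the suffix from a well-known URL. The host is a made-up example using the badgerlab realm from earlier, and the commented `oc` line shows one way the client secret could be created, with `openid-client-secret` being a hypothetical secret name.

```shell
# Example well-known URL copied from the realm settings page (made-up host).
well_known='https://keycloak.apps.mycluster.example.com/realms/badgerlab/.well-known/openid-configuration'

# Drop the suffix; what remains is the issuer, with no trailing slash.
issuer="${well_known%/.well-known/openid-configuration}"
echo "issuer: ${issuer}"

# The secret referenced by clientSecret.name could be created like this
# (openid-client-secret is a hypothetical name):
#   oc create secret generic openid-client-secret \
#     --from-literal=clientSecret=<client secret from Keycloak> \
#     -n openshift-config
```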

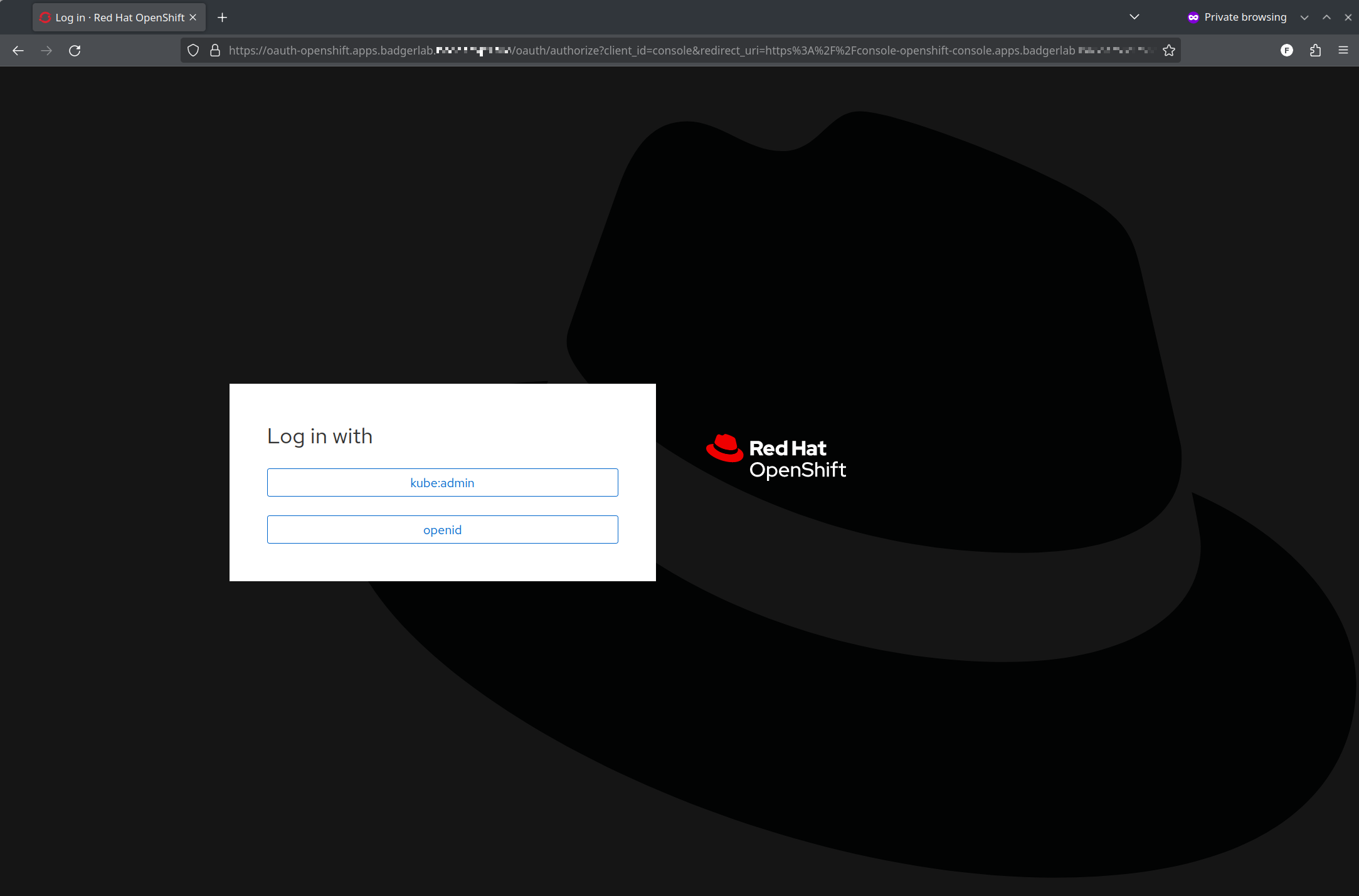

Ok, now that is all configured, you should be able to either log out of kubeadmin, or open an incognito tab/new browser window (recommended - keep your kubeadmin session open!) to test this out!

Going back to the console URL you should now see a new login button for openid, or whatever you named it:

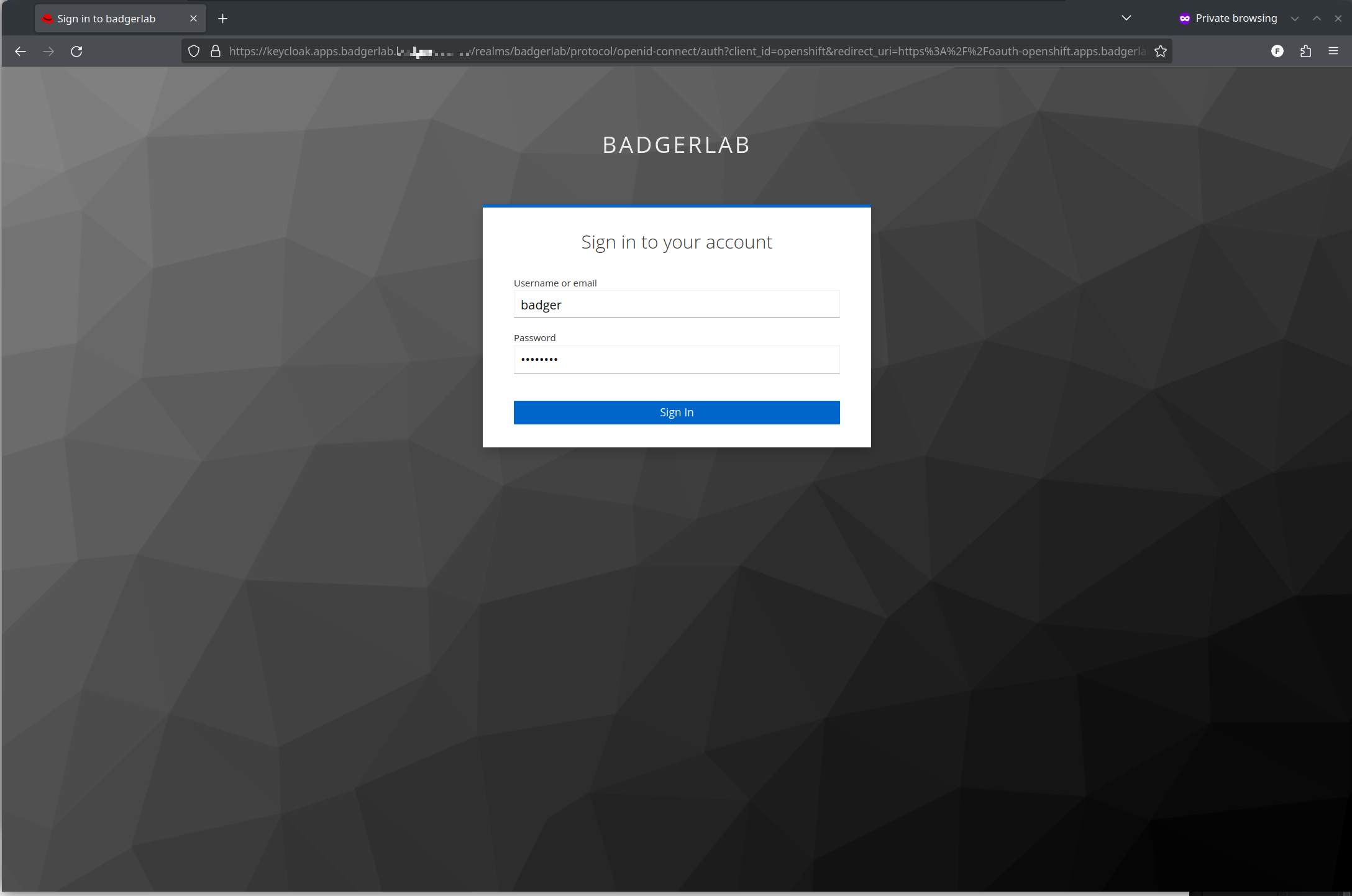

Let's select openid:

This loads your Keycloak login page, showing the realm name you set and any theme you've applied in Keycloak.

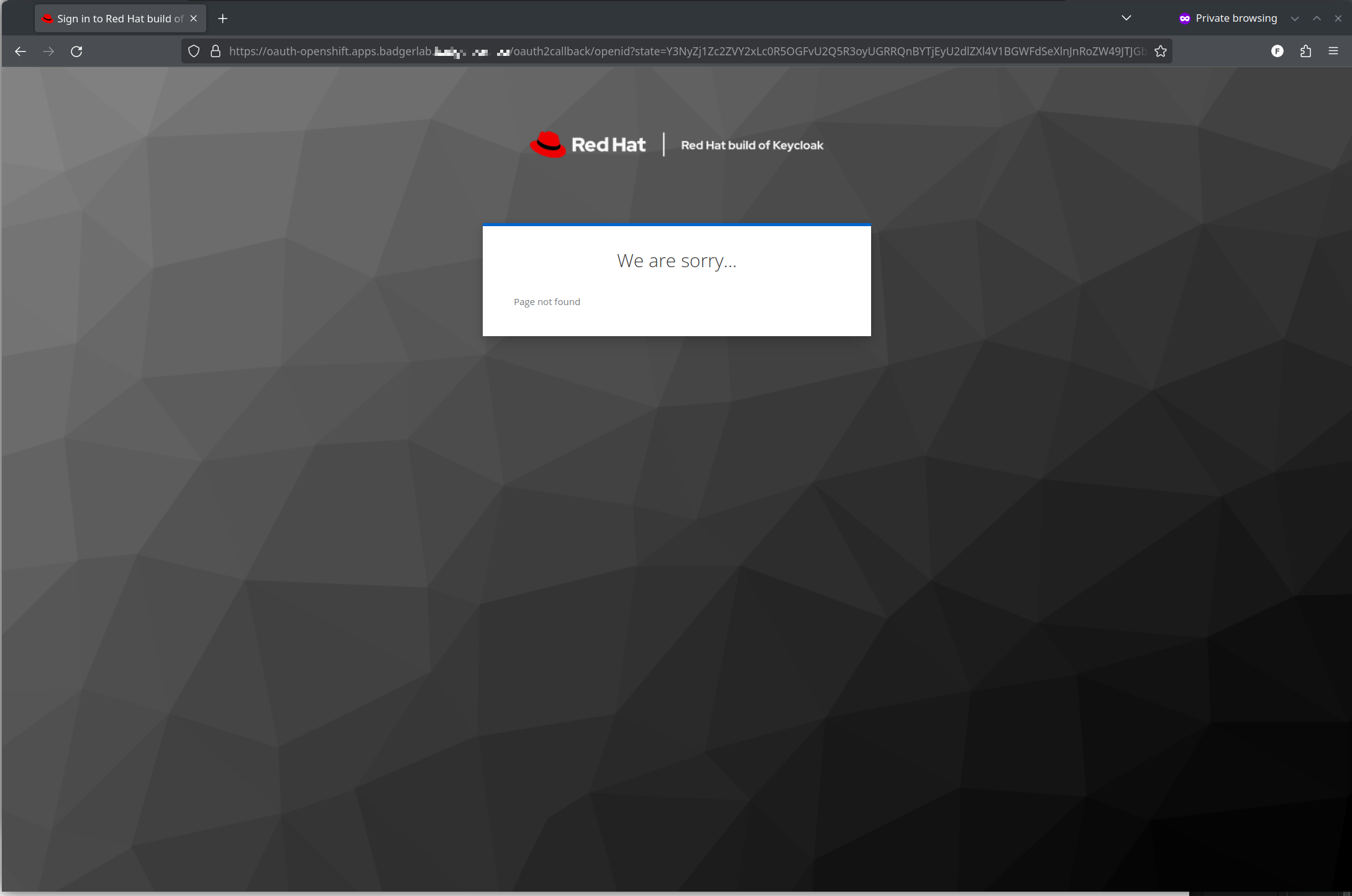

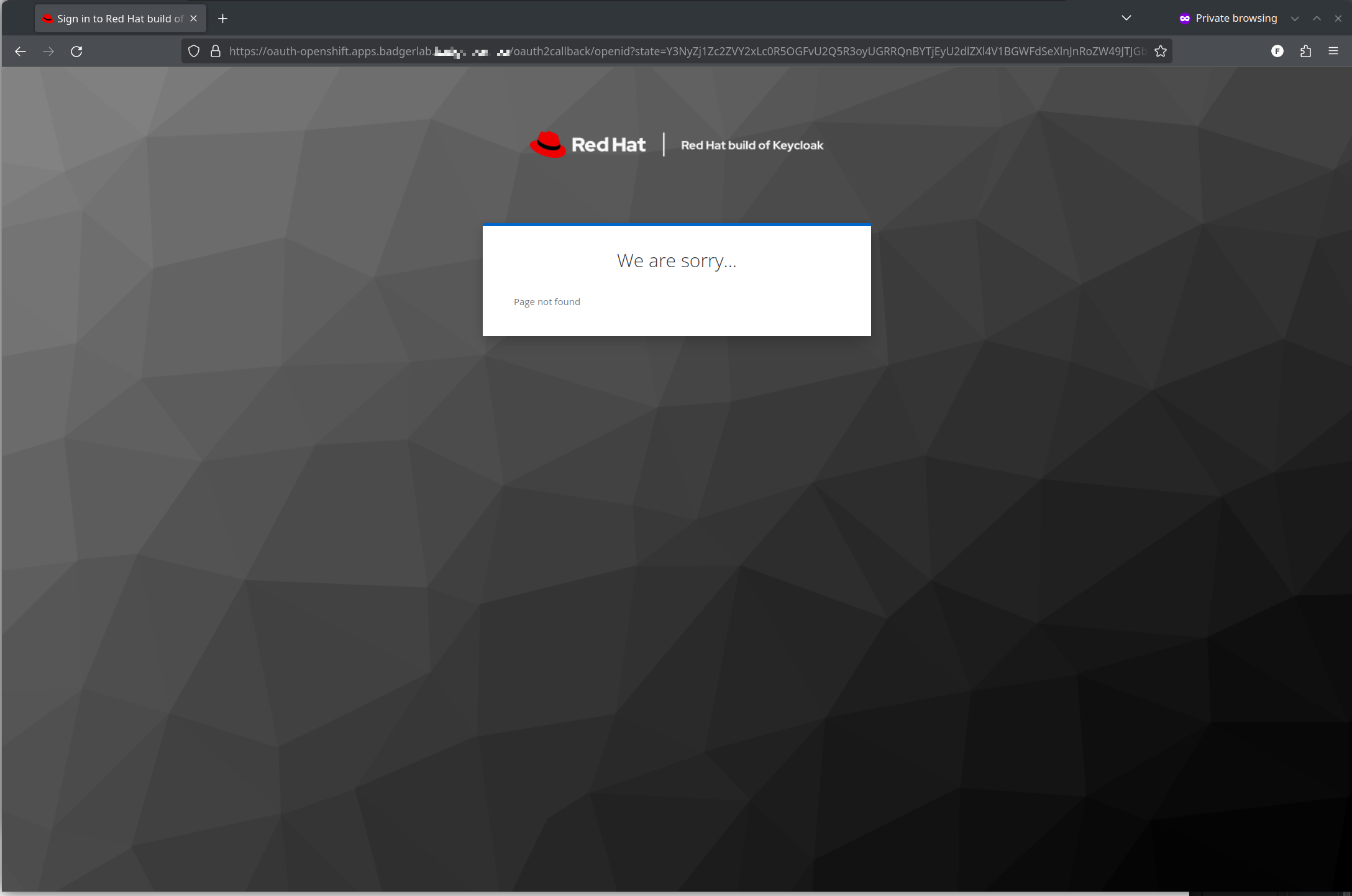

Oh no?!? What the heck is this? You may or may not see this screen. I'll explain in a minute; for now, just do a hard refresh (Ctrl + Shift + R) once or twice and it should load the console.

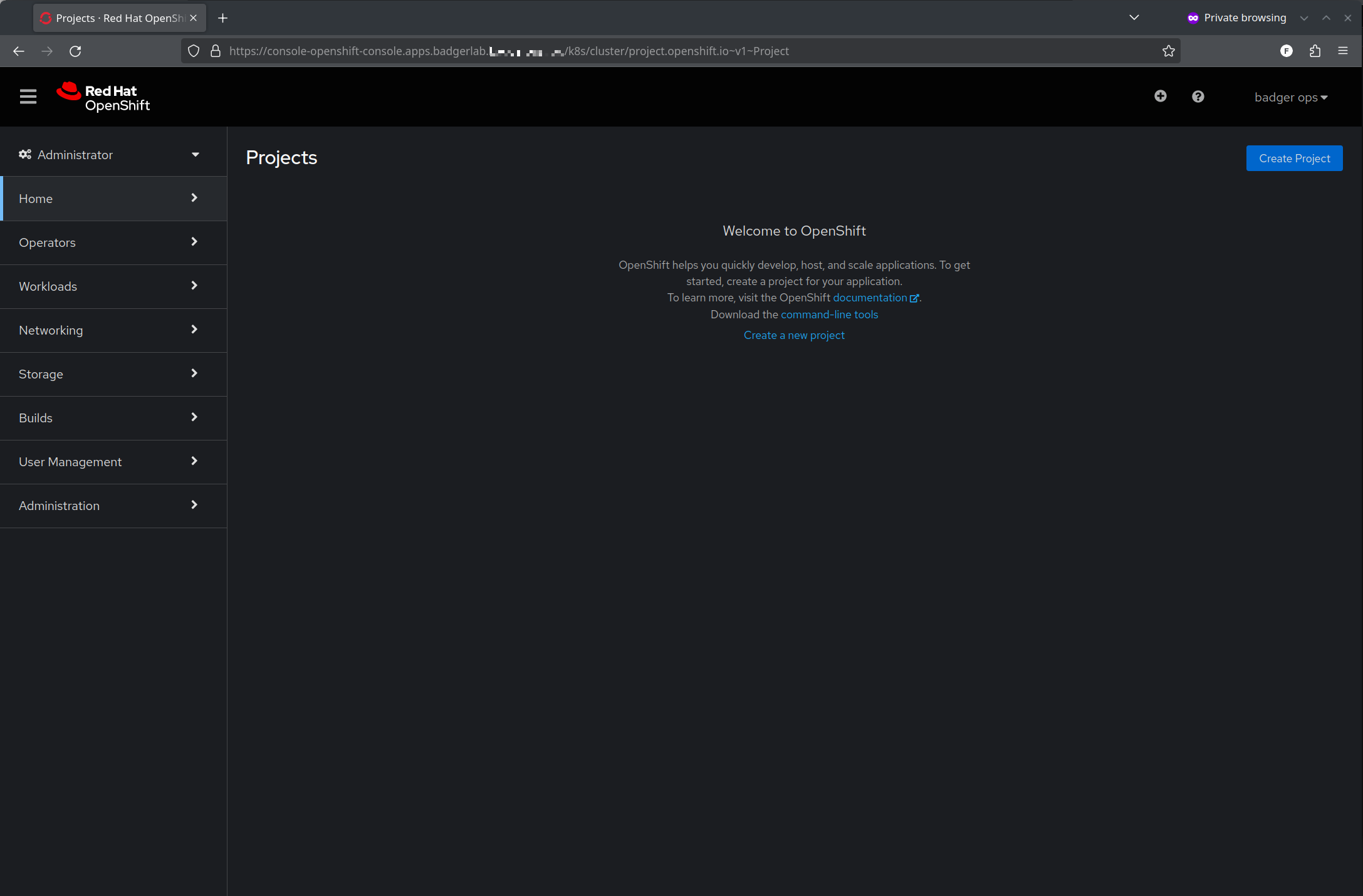

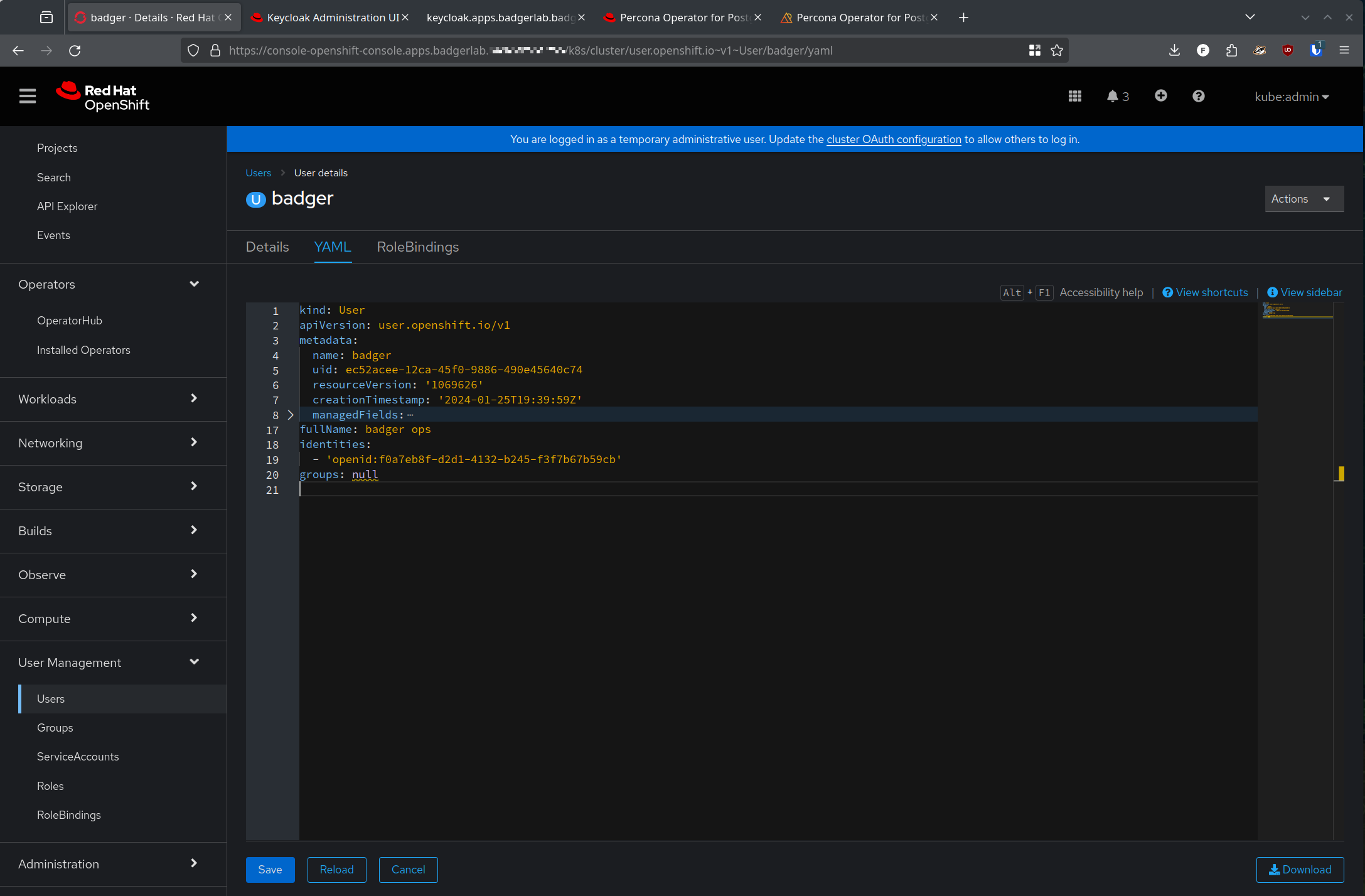

As an authenticated but not authorized user, you won't see anything in the cluster, but if we swap back to our kubeadmin session, we'll see a new user:

Note the identities section where we see openid:<guid> for the user, and the name, fullname, etc are sync'd over from Keycloak.

Ok, we're on the right track. Now, how do we give the user access to do stuff?

Here is where we will use the RBAC docs to map the group we created in Keycloak to a Role in Open Shift.

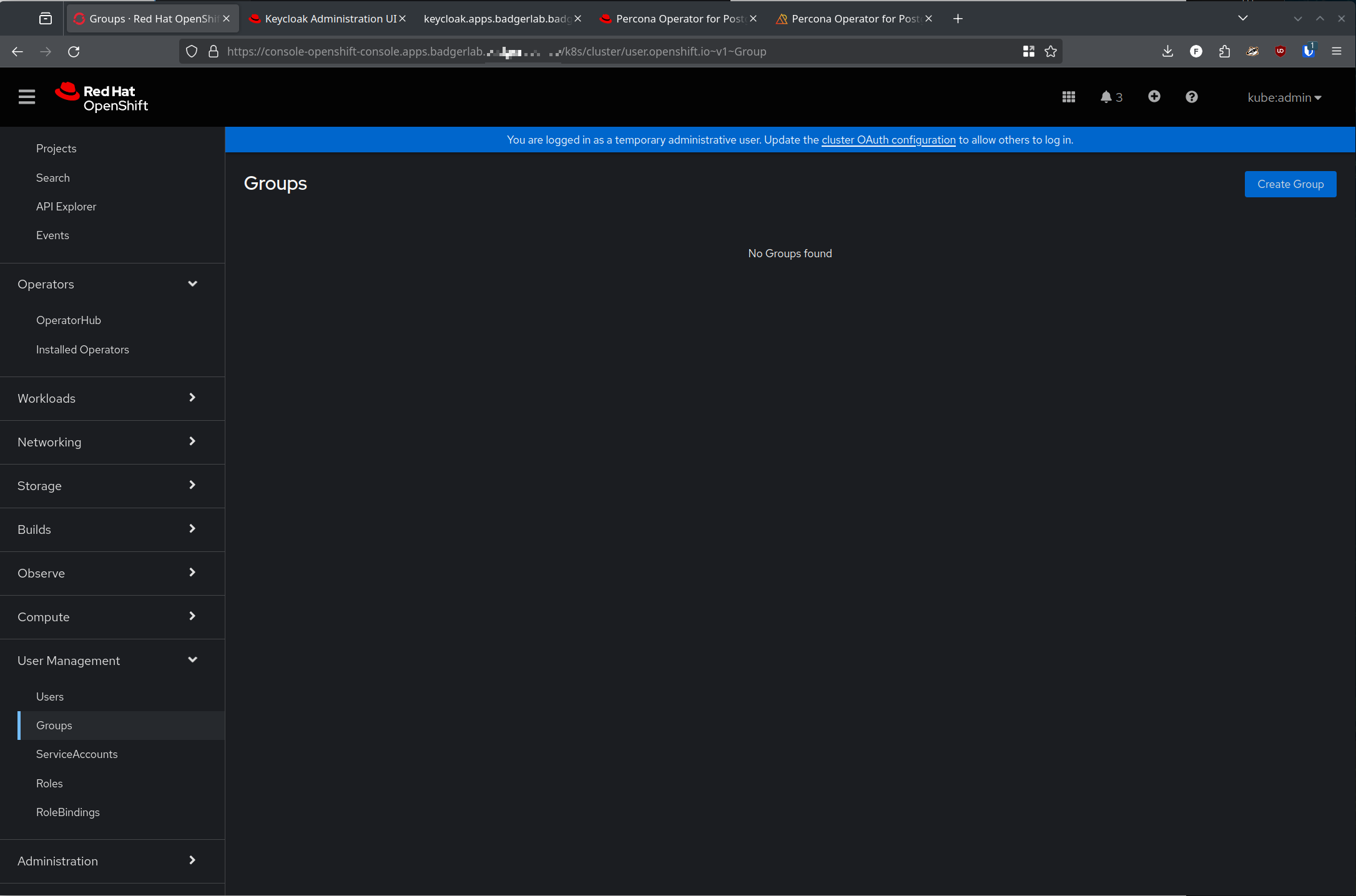

But first, where is that group? We know we see the user sync'd over from Keycloak, but I would expect the group I created and added to my user to also sync over, but alas, there is nothing there!

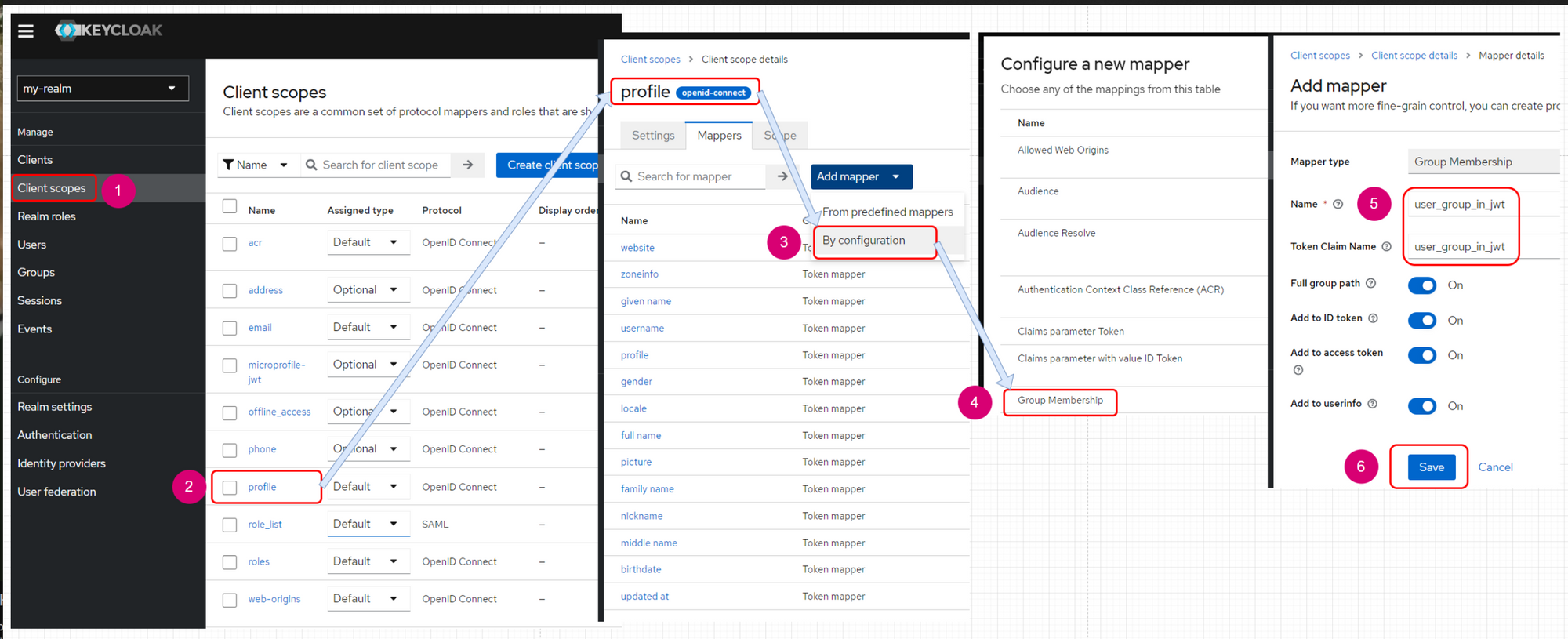

This one hung me up and had me very frustrated for a while until I stumbled across this Stack Overflow post. The Stack Overflow user Bench Vue provided a wonderful step-by-step sequence of screenshots to ensure that Keycloak adds the user groups into the JSON Web Token (JWT) that is sent to the client, which in our case is Open Shift.

Heading back to our Keycloak page, we navigate to:

- our Realm we created

- Client Scopes

- Profile

- "Add Mapper"

- "Group Membership"

- set name to user_group_in_jwt

- UNSELECT 'full group path' or else the group will have a preceding / which makes Open Shift error out very badly

Stackoverflow article screenshot here for posterity:

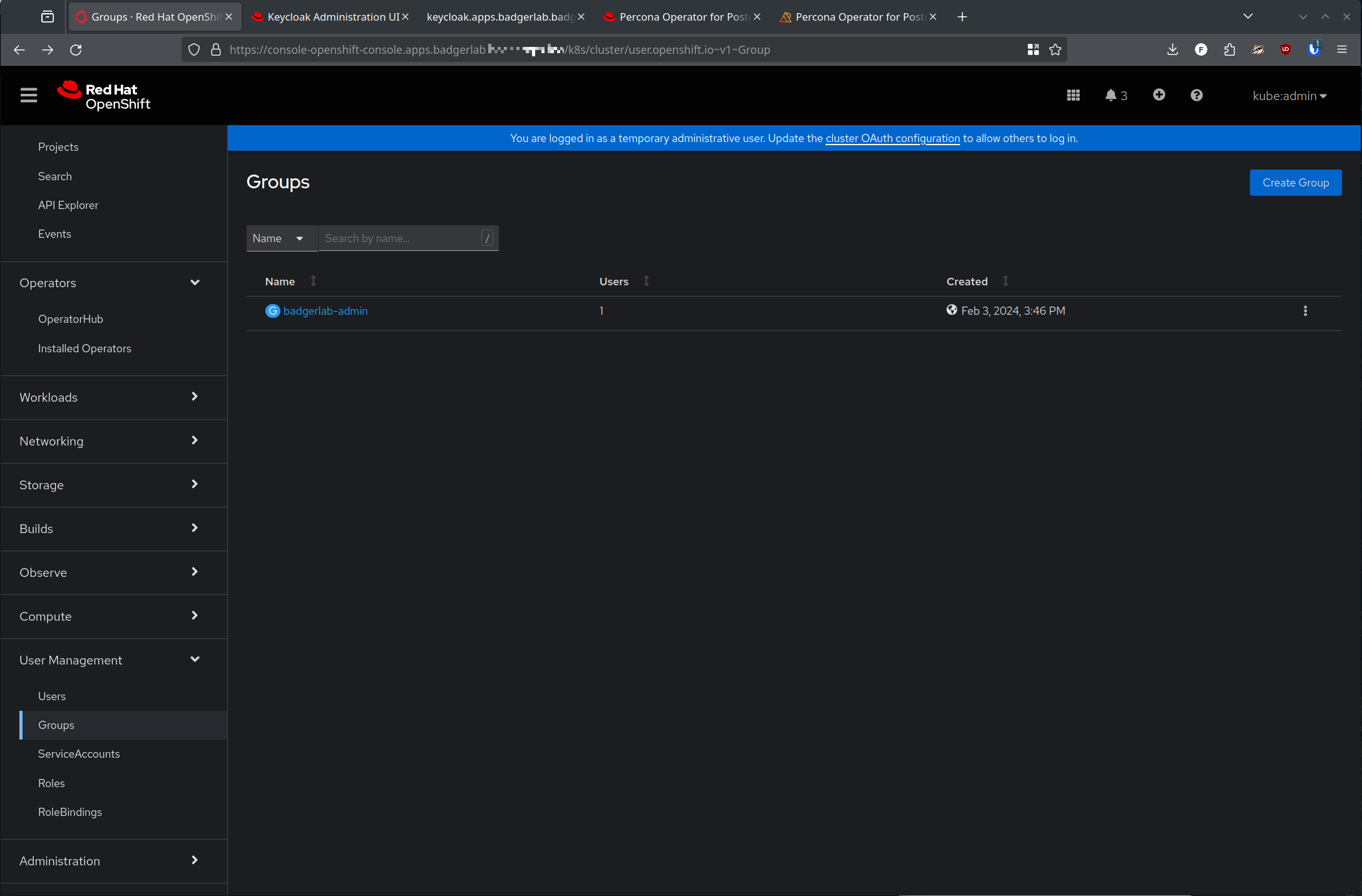
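If you want to check the mapper before logging back in, you can decode the payload of a token issued for the client and look for the groups claim (I believe the Evaluate tab under the client's Client scopes in the Keycloak admin console can generate an example token). This is a rough sketch: the `jwt_payload` function name is my own, the `openshift-admins` group is a hypothetical name, the token in the usage example is built by hand rather than issued by Keycloak, and no signature verification happens here.

```shell
# Print the JSON payload (second dot-separated segment) of a JWT.
# Decoding only: this does NOT verify the signature.
jwt_payload() {
  # Convert base64url characters to standard base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the '=' padding that base64url encoding strips.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 --decode
}

# Usage with a hand-built, unsigned demo token (hypothetical claims).
demo_claims='{"preferred_username":"demo","groups":["openshift-admins"]}'
demo_token="header.$(printf '%s' "$demo_claims" | base64 | tr -d '=\n' | tr '/+' '_-').signature"
jwt_payload "$demo_token"
```

With 'full group path' unselected, the groups claim should contain bare names like the one above, with no leading slash.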

With this added, log out of your user session in Open Shift, and log back in. Now you should be able to check to see the group is sync'd over in your kubeadmin Open Shift session:

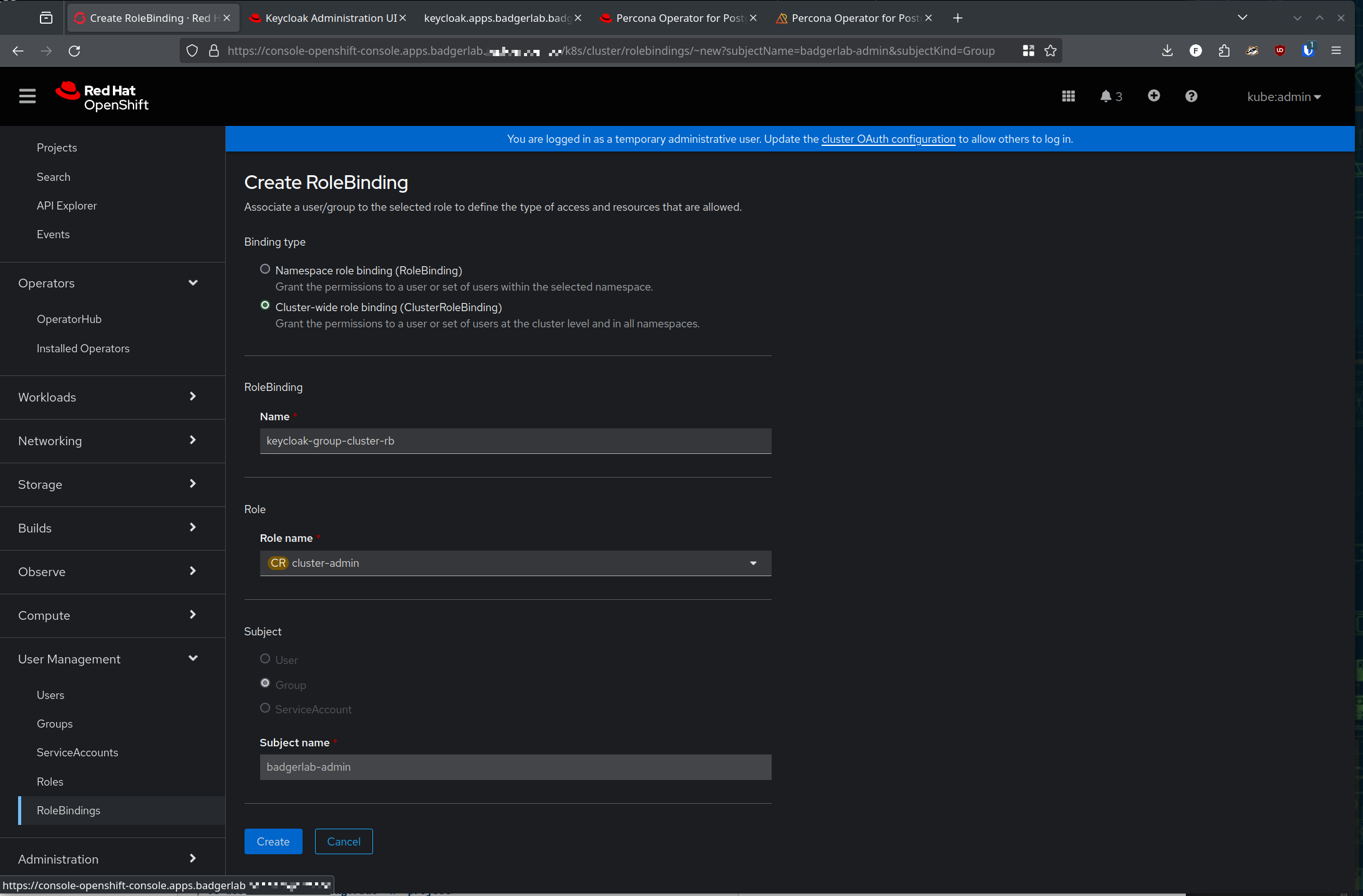

So, we can click into that group that is synced over from Keycloak, swap to the 'rolebindings' tab and click "create binding". We'll make this a cluster rolebinding, and give ourselves the cluster-admin role:

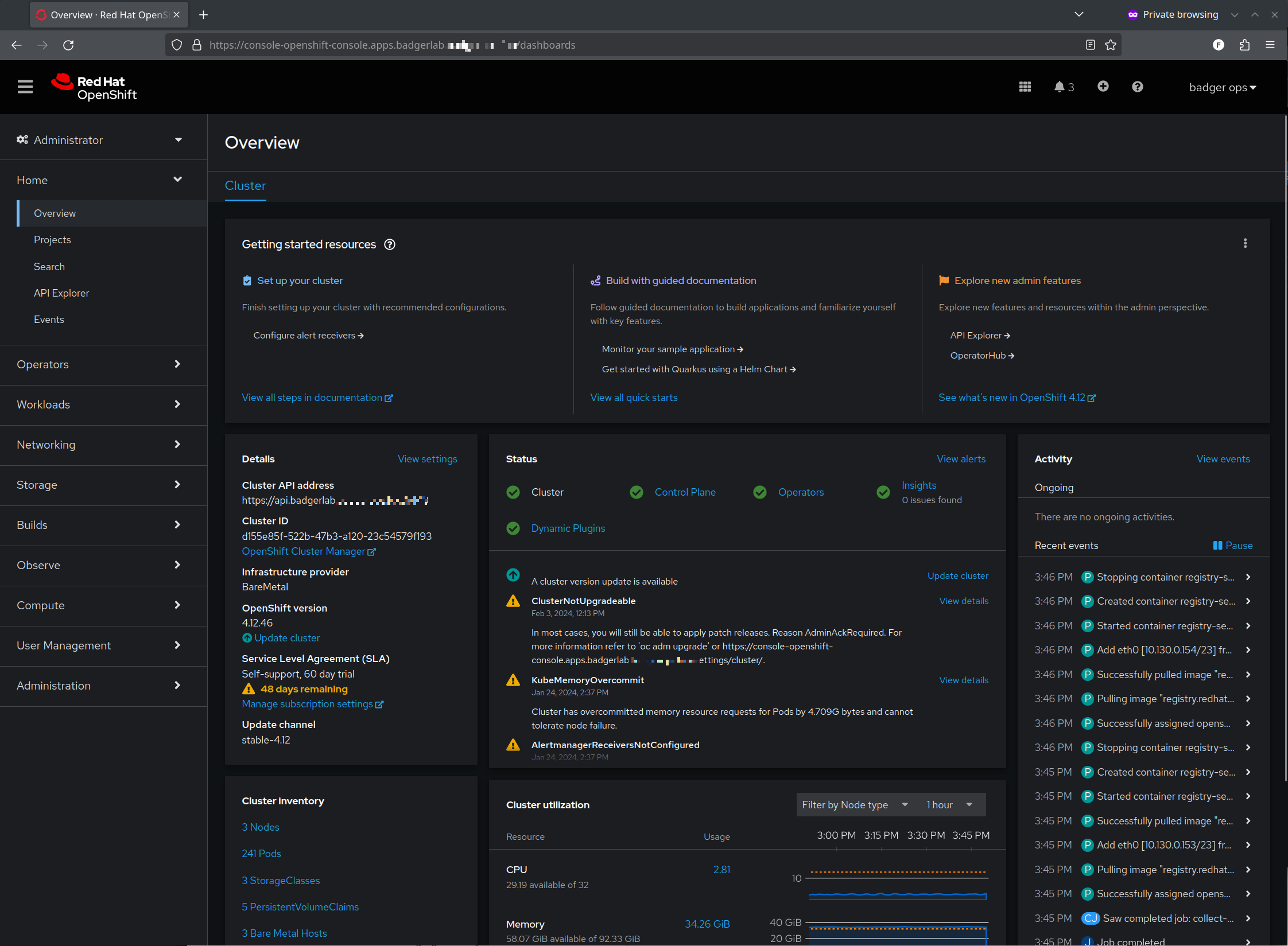
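For those who prefer manifests to clicking, the same binding can be sketched as yaml. The group name `openshift-admins` and the binding name are hypothetical; use the group name exactly as it synced over from Keycloak.

```shell
# Sketch only: writes a ClusterRoleBinding for a synced group to a file.
# Group and binding names are hypothetical placeholders.
cat > keycloak-admins-crb.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: keycloak-admins-cluster-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: openshift-admins
EOF

# Apply from the kubeadmin session with: oc apply -f keycloak-admins-crb.yaml
# A one-line alternative:
#   oc adm policy add-cluster-role-to-group cluster-admin openshift-admins
```

cluster-admin is a big hammer; for anything beyond a lab, a narrower role in the roleRef is probably the better choice.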

Once we click 'create' we can swap back to our user session and refresh. We should see that we're now cluster admins with the ability to see everything:

Note, this is a small 3-node cluster running on some old laptops. It is wildly underpowered and Open Shift likes to complain about that, ha!

So now at ~1,600 words in I'm about ready to wrap it up.

But Wait! What about that weird "We are sorry..." screen that came up?

Remember 25 minutes ago when I was saying something about http/2 connection reuse? And that I re-used the *.apps.<cluster>.<domain> certificate for this Keycloak deployment?

As it turns out, that causes a weird problem where Open Shift SDN will sometimes, but not always, randomly send traffic meant for the Open Shift Console to the Keycloak pod, which quite correctly states "I don't know what to do with this? Sorry?"

There are a couple 'correct' fixes here. We can set tls: reencrypt on the ingress, or create a new certificate keycloak.<whatever> and set our ingress to use that instead of re-using the same *.apps.<cluster>.<domain> certificate.

Which, frankly, is a little annoying, since the whole Open Shift pitch is "Hey, everything lives under the *.apps.<cluster>.<domain> path and just works" - uh, that is, until it doesn't.

What I ended up doing in our Production environment was creating a new certificate and using that, along with a slightly different Keycloak DNS name, keycloak.<region>.<domain>, with an 'A' record pointing to the same IP that *.apps.<cluster>.<domain> uses. That works.
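As a sketch of that change, the hostname and TLS parts of the Keycloak resource would end up looking something like this. `keycloak-tls` is a hypothetical secret name, and the certificate file names in the comment are placeholders.

```shell
# Sketch only: the spec fields that change when Keycloak gets its own
# hostname and certificate. keycloak-tls is a hypothetical secret name.
cat > keycloak-dedicated-host.yaml <<'EOF'
spec:
  hostname:
    hostname: keycloak.<region>.<domain>
  http:
    tlsSecret: keycloak-tls
EOF

# The TLS secret could be created from the new certificate and key with:
#   oc -n keycloak create secret tls keycloak-tls --cert=tls.crt --key=tls.key
```

If you move Keycloak to a new hostname, the issuer in the Open Shift Oauth config and the URL your users hit both need to change to match it.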

So that's it, that's the blog post. I hope that it helps you figure out how to deploy Keycloak as an IDP for Open Shift using Oauth, and set up RBAC mapping for your Keycloak Group(s) to Open Shift Roles. I may write a more in-depth blog post on that in the future as I learn more in that area.

As always, feel free to hit me up on twitter @badgerops or mastodon.social/@badgerops if you have pointers, questions or corrections.

Cheers!

-BadgerOps